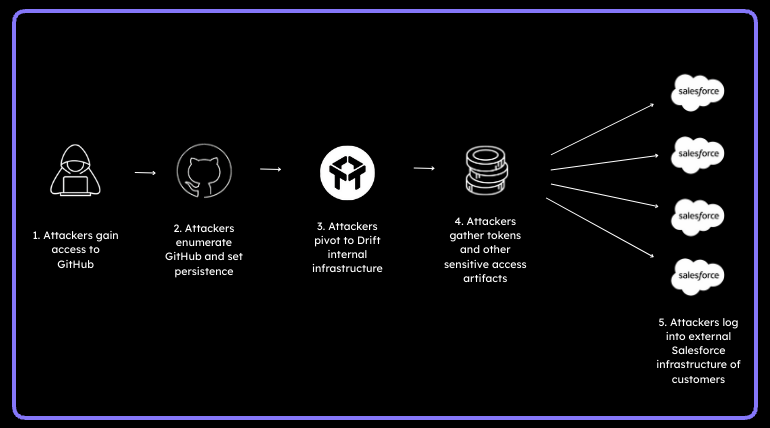

There’s been a lot of news circulating about the Salesforce breach and the (potentially) cascading breaches that resulted. What’s interesting is that this high-profile breach wasn’t executed by a well-known APT group. Google Threat Intelligence (GTIG) tracks the cluster of activity as ‘UNC6395’, although attribution is still contested by some. More breaches will likely surface as a result of this attack. In this post, we walk through how it occurred using publicly available information and explore, through an attacker’s lens, why and how decisions were made.

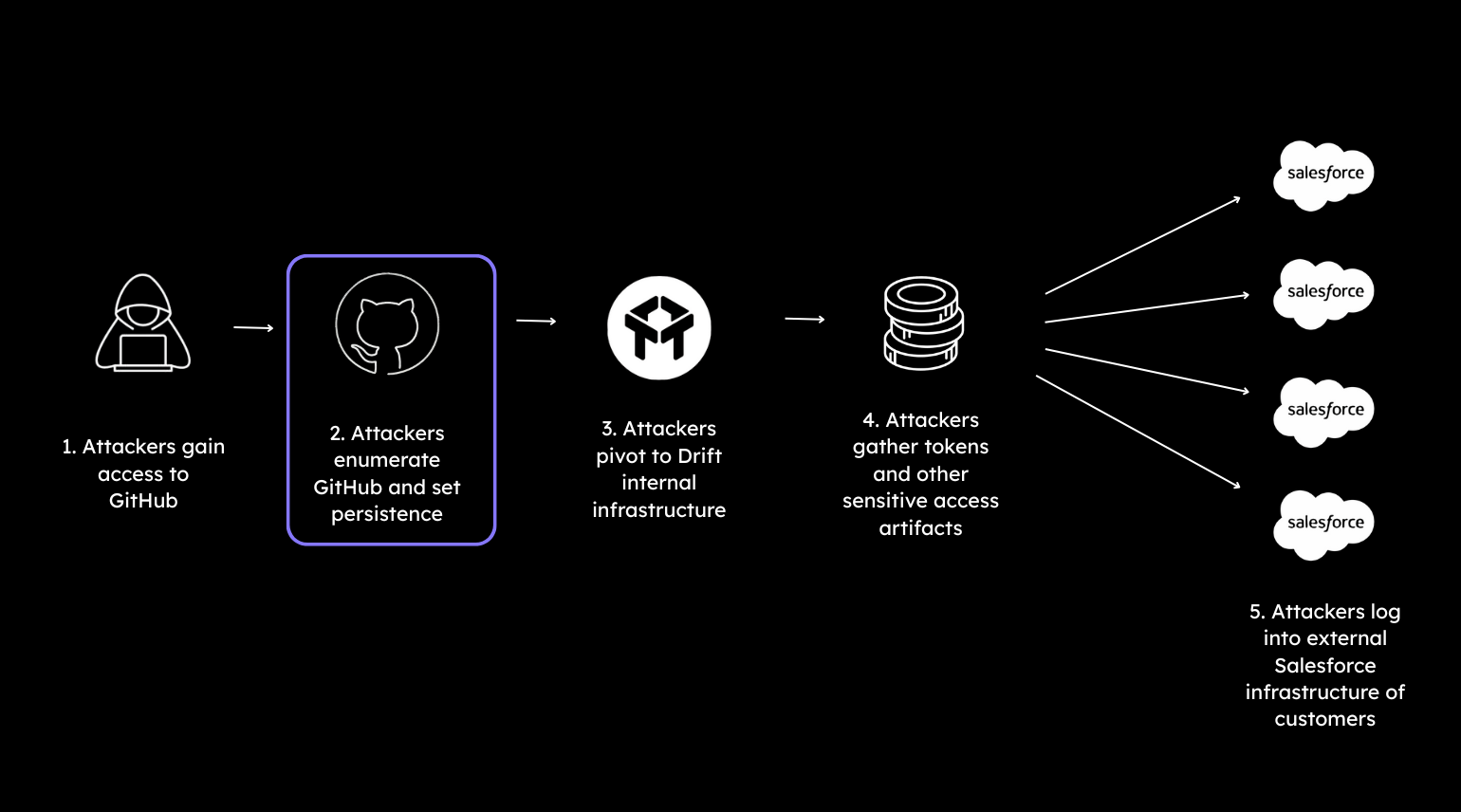

Stage 1 – GitHub: The First Foothold

The initial foothold is estimated to date back to March, when the attackers compromised Salesloft’s GitHub account.

Fig 1: Initial Access on path

The adversaries reportedly maintained access for months, performing a number of actions: reconnaissance, creating automation/workflows, and even adding a guest user account for persistence. Through that access, they obtained sensitive credentials and integration material (e.g., OAuth tokens, AWS keys, passwords).

Fig 2: Enumeration Phase

Given the long duration of access and the depth of interaction with the GitHub account, it is alarming that the activity went undetected. Even in modern enterprises, accurately assessing risk and effectively monitoring GitHub and other SaaS applications appears to be extremely difficult, and it is harder still for small-to-medium enterprises and startups. In my own previous adversary simulations, GitHub was almost always an easy target with hardly any detection capabilities.
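To make the detection gap concrete, here is a minimal sketch of the kind of cheap rule that could surface two of the persistence actions described in Stage 1: pushes that touch GitHub Actions workflow files (which live under `.github/workflows/`) and new accounts added outside an approved list. The `AuditEvent` record, its field names, and the `push`/`member_added` action strings are a hypothetical, simplified schema, not GitHub's actual audit-log format; you would map your own log source onto them.

```python
from dataclasses import dataclass, field
from typing import List, Set, Tuple

WORKFLOW_DIR = ".github/workflows/"


@dataclass
class AuditEvent:
    """Hypothetical, simplified audit record; NOT GitHub's real audit-log schema."""
    actor: str
    action: str                                     # placeholder values: "push", "member_added"
    target: str                                     # repository name, or the account that was added
    paths: List[str] = field(default_factory=list)  # files touched by a push


def flag_persistence(events: List[AuditEvent], approved: Set[str]) -> List[Tuple[str, AuditEvent]]:
    """Return (reason, event) pairs that deserve a human look."""
    alerts = []
    for ev in events:
        # Workflow changes can add automation that runs with repository secrets.
        if ev.action == "push" and any(p.startswith(WORKFLOW_DIR) for p in ev.paths):
            alerts.append(("workflow_modified", ev))
        # New accounts (guests, outside collaborators) that nobody approved.
        if ev.action == "member_added" and ev.target not in approved:
            alerts.append(("unapproved_member", ev))
    return alerts
```

Neither rule is sophisticated; the point is only that this class of signal is cheap to produce once the audit log is actually being ingested.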

How Attackers Gain Access to GitHub

There are a number of ways adversaries can gain access to enterprise GitHub accounts like this one, and most of them are already on most organizations’ minds: phishing by way of endpoint compromise (traditional phishing), phishing by way of a credential capture portal (à la Modlishka or evilginx2), vishing, password reuse (from other breaches), password spraying (where MFA is missing), etc. One of these was most likely the real security failure that allowed the cascading events to occur. Attackers also have plenty of sources for detailed information on companies: tools like RocketReach, ZoomInfo, LinkedIn, etc. allow them to identify targets within an organization and use social media footprints to craft accurate pretexts based on identifying demographic information.

I have personally used tools like these when enumerating an organization. They contain a wealth of information, and beyond the obvious value of a direct hit, you can also glean details about your target: phone number ranges, email address formats, workforce distribution, etc.
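As an example of how little data it takes to learn an email address format, the sketch below scores a handful of known name/address pairs against a few candidate formats. The format list and the `infer_format` helper are illustrative assumptions, not the output of any particular tool; defenders can run the same exercise against their own public footprint to see what an attacker would learn.

```python
from typing import Callable, Dict, Iterable, Tuple

# Candidate local-part formats; an illustrative assumption, not an exhaustive list.
FORMATS: Dict[str, Callable[[str, str], str]] = {
    "{first}.{last}": lambda fn, ln: f"{fn}.{ln}",
    "{first}{last}": lambda fn, ln: f"{fn}{ln}",
    "{f}{last}": lambda fn, ln: f"{fn[0]}{ln}",
    "{first}_{last}": lambda fn, ln: f"{fn}_{ln}",
    "{first}": lambda fn, ln: fn,
}


def infer_format(samples: Iterable[Tuple[str, str, str]]) -> Tuple[str, int]:
    """Given non-empty (first, last, email) samples, return the best-matching format and its hit count."""
    counts = {name: 0 for name in FORMATS}
    for first, last, email in samples:
        local = email.split("@", 1)[0].lower()
        fn, ln = first.lower(), last.lower()
        for name, render in FORMATS.items():
            if render(fn, ln) == local:
                counts[name] += 1
    best = max(counts, key=counts.get)
    return best, counts[best]
```

Two or three known addresses are usually enough to make the dominant format obvious, which is why public employee listings matter.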

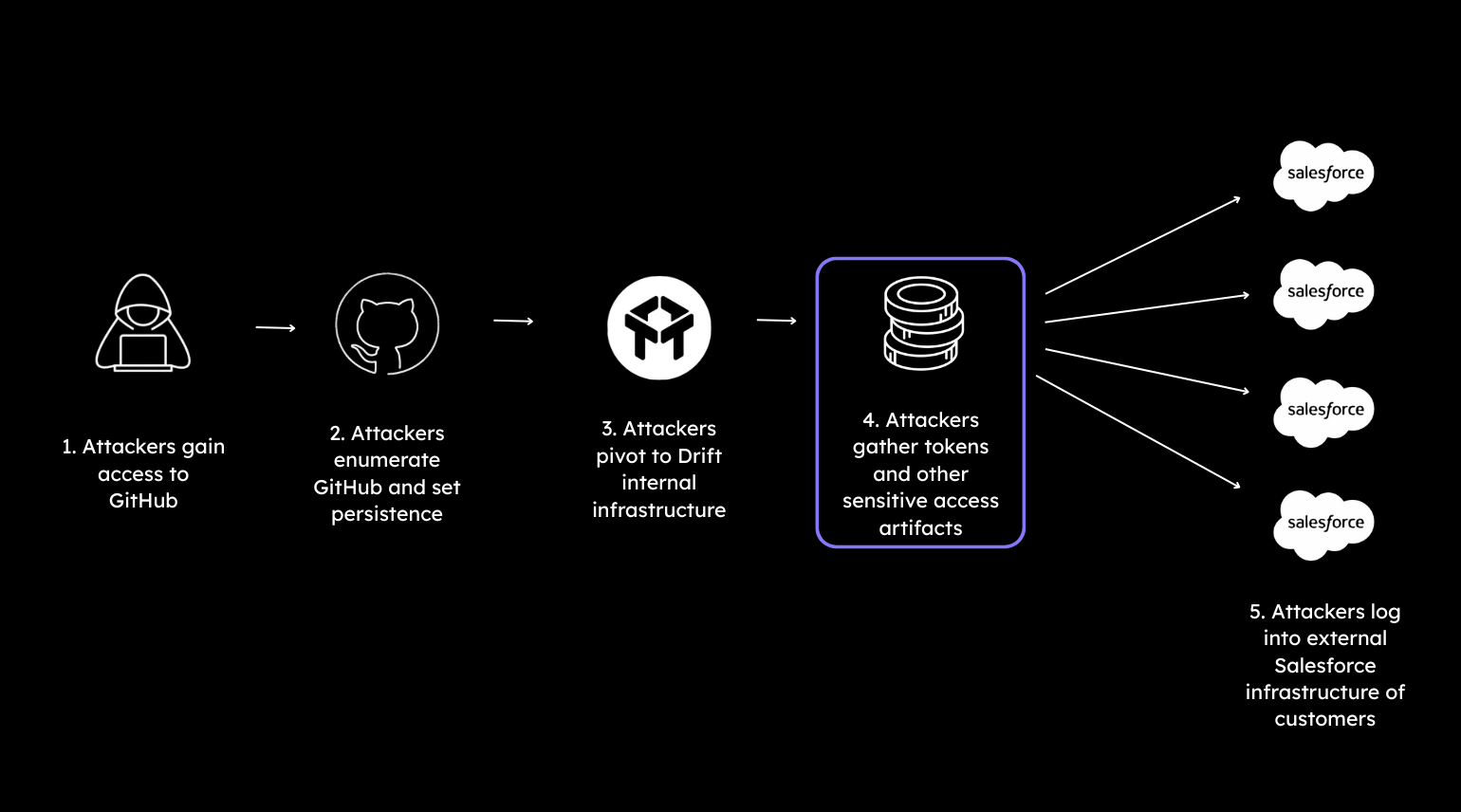

Stage 2 – From Code Repo to Drift

After compromising the GitHub account, establishing some degree of persistence, and collecting credentials, the attackers moved on to targeting the Drift application itself.

Fig 3: Drift Access

Using secrets from the GitHub breach (although some write-ups state that the Drift abuse occurred at the same time), the actors accessed the infrastructure and data tied to Drift. This included the OAuth tokens that customer orgs used to connect Drift with Salesforce (and, in some cases, Google Workspace via Drift Email).

Fig 4: Salesforce Token Collection

According to GTIG, the primary goal of harvesting information through this access was to collect credentials for further access into other organizations. We are seeing that play out as predicted, with recent breaches surfacing that are reportedly linked to the Salesforce/Drift breach. This approach isn’t new; attackers have done the same thing in the past through supply chain compromises, by targeting third parties who integrate with their real target, etc. It is a standard tactic in offensive security: every compromised system can be pillaged for further access.

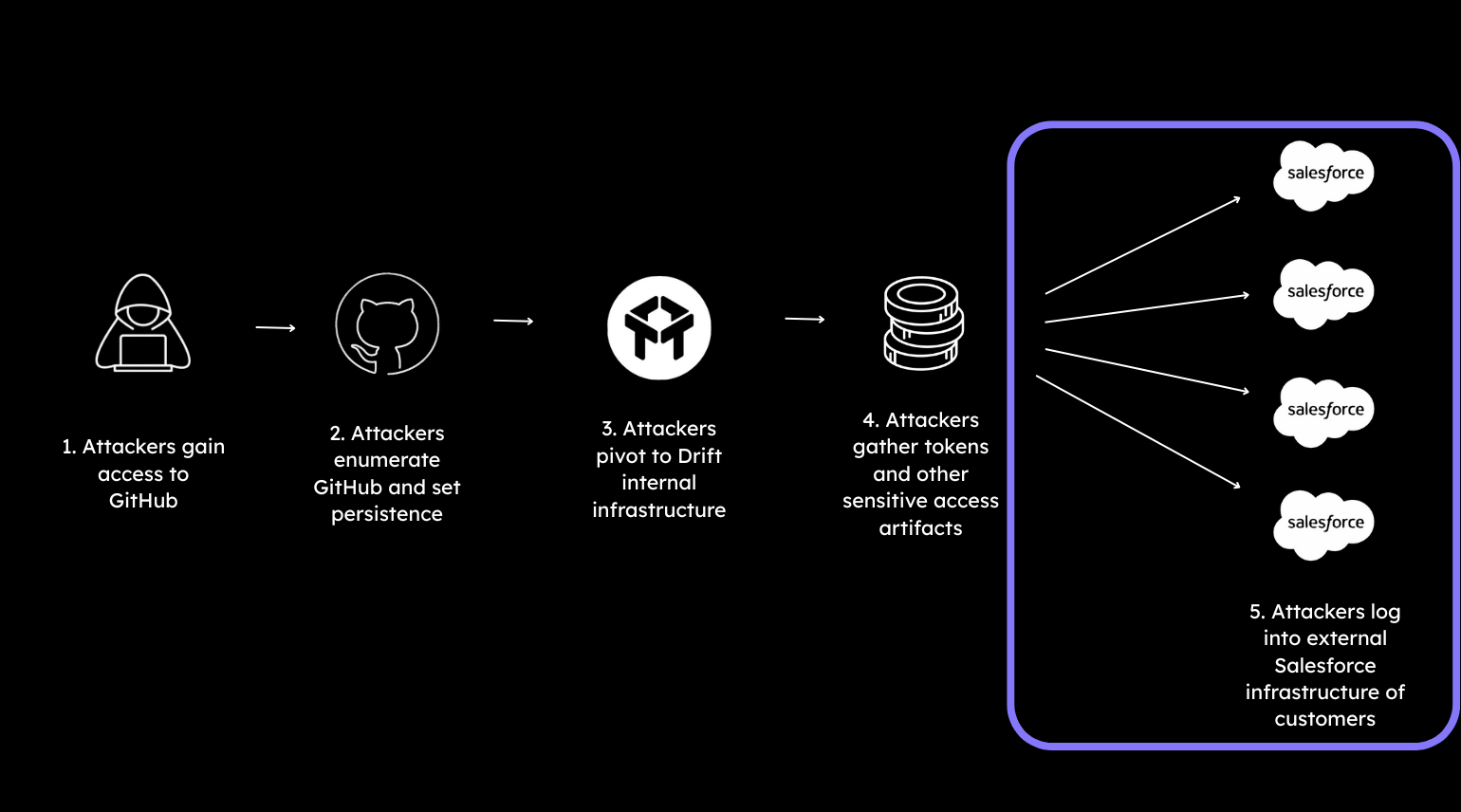

Stage 3 – Salesforce Exploitation

The next phase of the attack put the pilfered OAuth tokens to use against connected customer Salesforce instances.

Using valid Drift-issued tokens scoped to customers’ Salesforce instances, the actors connected and exported large volumes of data from multiple connected companies.

Stage 4 – Detection and Containment

With regard to detection and containment, the response was swift and followed a standard process. The campaign was shut down around August 18, 2025, when broad revocations and app disablement cut off access. Salesloft issued a public advisory for customers on August 20, 2025. Many victims then revoked Drift’s access, rotated tokens, and initiated internal forensics to determine whether they were impacted. Typically, identified credentials (as well as any credentials potentially associated with them) are rotated in bulk. This is usually done very quickly to prevent the attacker from using any remaining access to regenerate tokens, keys, or other means of access. In parallel, investigators review the actions the attacker executed in order to identify persistence methods, other forms of access, or any other damage.
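The "rotate everything associated" step is essentially a graph walk: start from the credentials confirmed as leaked and expand to every credential linked to them. Below is a minimal sketch, assuming you already have an inventory recording which credentials are associated (for example, stored in the same repository or used by the same integration); the `rotation_scope` helper and the credential IDs in the example are hypothetical.

```python
from collections import deque
from typing import Dict, Iterable, List, Set


def rotation_scope(compromised: Iterable[str], associations: Dict[str, Set[str]]) -> List[str]:
    """Expand confirmed-leaked credentials to everything reachable through associations.

    associations maps a credential ID to the IDs it is linked to (same repo, same
    integration, etc.); how "associated" is defined is an org-specific assumption.
    Returns IDs breadth-first: confirmed leaks first, then by distance from them.
    """
    seen: Set[str] = set()
    order: List[str] = []
    queue = deque(compromised)
    while queue:
        cred = queue.popleft()
        if cred in seen:
            continue
        seen.add(cred)
        order.append(cred)
        # sorted() only makes the output order deterministic.
        for linked in sorted(associations.get(cred, set())):
            if linked not in seen:
                queue.append(linked)
    return order
```

Breadth-first order is a reasonable default for a rotation runbook: the credentials closest to the confirmed leak are handled first.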

Public disclosures to date include Zscaler, Palo Alto Networks, Cloudflare, Tanium, SpyCloud, Qualys, and Tenable, with more likely to come. Most report Salesforce data theft rather than core-infrastructure compromise. While this might seem like a silver lining, remember that the data in Salesforce is the beating heart of enterprise application data: contracts around purchases, users and their information, process data, etc. All of this can be sold on forums for great profit or used by other threat actors for further compromises. GTIG, as well as independent researchers, warns that the impact extends beyond Salesforce wherever Drift integrations existed (e.g., limited Google Workspace access via Drift Email in some tenants).

As discussed earlier, a number of factors worked in UNC6395’s favor:

- Weak detections around GitHub provided a safe initial foothold from which to pillage credentials. Secrets stored in those repositories enabled the pivot.

- Many grants and trusted applications were linked without any segregation or narrowing (ACL’ing down) of OAuth scopes.

- Much of the activity was API-native and ran through trusted tools, so it looked like normal activity.

- There was a lack of strong alerting around APIs, identities, and CI/CD actions.
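A first pass at the OAuth scoping problem is simply auditing which connected apps hold which scopes. The sketch below assumes grant records exported as dicts with hypothetical `app` and `scopes` keys. The scope names `full`, `api`, and `refresh_token` follow Salesforce's OAuth scope naming, but treating them as "broad" is an assumption you should adjust for your own platform and risk appetite.

```python
from typing import Dict, Iterable, List, Set, Tuple

# Treating these as "broad" is an assumption; adjust for your platform's scope model.
BROAD_SCOPES = {"full", "api", "refresh_token"}


def audit_grants(grants: Iterable[Dict], approved_apps: Set[str]) -> List[Tuple[str, str]]:
    """Flag connected-app grants that are unapproved or hold broad scopes.

    grants: dicts with hypothetical keys "app" (str) and "scopes" (list of str).
    """
    findings = []
    for grant in grants:
        broad = BROAD_SCOPES.intersection(grant["scopes"])
        if grant["app"] not in approved_apps:
            findings.append((grant["app"], "unapproved_app"))
        # "full" covers all data the user can reach; "api" plus "refresh_token" allows
        # long-lived programmatic access without the user present.
        if "full" in broad or {"api", "refresh_token"} <= broad:
            findings.append((grant["app"], "broad_scope:" + ",".join(sorted(broad))))
    return findings
```

Note that an approved app can still be flagged: being sanctioned does not mean its scopes were ever narrowed.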

Attacker TTPs Observed

Some of the TTPs we observed in the attack:

- Credential harvesting from code repos and reuse of app secrets and tokens:

  - Credential Access → T1552.001 Unsecured Credentials: Credentials in Files (secrets in source/config), T1528 Steal Application Access Token (grabbing OAuth/API tokens), Defense Evasion / Lateral Movement → T1550.001 Use Alternate Authentication Material: Application Access Token.

- OAuth legitimate app authorization to pull data through normal Salesforce APIs:

  - Persistence → T1671 Cloud Application Integration (malicious/abused OAuth app integration in SaaS), T1078.004 Valid Accounts: Cloud Accounts (using authorized cloud identities), and Defense Evasion/Credential Access → T1550.001 Application Access Token. For pulled data: Collection → T1213.004 Data from Information Repositories: Customer Relationship Management (CRM) Software.

- Persistence via guest user creation and workflow modifications:

  - Persistence → T1136.003 Create Account: Cloud Account (guest user), T1098 Account Manipulation (e.g., adding roles/permissions: T1098.003 Additional Cloud Roles / T1098.001 Additional Cloud Credentials), and often T1671 Cloud Application Integration if automations were implemented. If workflows/webhooks were used to exfiltrate data, that also lines up with Exfiltration → T1567.004 Exfiltration Over Webhook.

- High-value exfiltration of customer information, commercial details, support cases, and other juicy CRM artifacts:

  - Collection → T1213.004 Data from Information Repositories: CRM Software (targeted pulls), then Exfiltration → T1567 Exfiltration Over Web Service (and relevant sub-techniques such as .002 Cloud Storage or .004 Webhook, depending on the destination). If scripted/periodic, we could tag T1020 Automated Exfiltration and/or T1029 Scheduled Transfer.

Lessons for Defenders

With all of the above in mind, what can defenders do to protect against similar attacks? There are a fair number of well-known patterns that help stop an attacker (or, if you are part of a mature organization, a Pentester or Red Teamer… you are Red Teaming your organization, right?).

First, organizations need to scan for and purge secrets from GitHub. I cannot overstate how often credentials are found in GitHub or Confluence. There are sayings in the Red Team community: “Creds are in GitHub” and “Check Confluence.” While intended to be cheeky, they underscore how lax most orgs are with secrets, often trading security for deployment timelines. There have definitely been instances where I, or operators on our team, were able to access critical systems (including SIEMs!) because a script contained a cleartext (or base64-encoded) password.
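Dedicated scanners (gitleaks, TruffleHog, GitHub's own secret scanning) are the right tool for this, but the core idea fits in a few lines. This is a minimal sketch with two illustrative rules, the well-known `AKIA`/`ASIA` prefix for AWS access key IDs and a generic `password=` assignment, plus a pass that decodes base64-looking blobs, since base64 encoding is not protection. The rule set and the `scan_text` helper are assumptions for illustration only.

```python
import base64
import binascii
import re
from typing import List, Tuple

# Two illustrative rules; real scanners ship far larger, tested rule sets.
PATTERNS = {
    "aws_access_key_id": re.compile(r"\b(?:AKIA|ASIA)[0-9A-Z]{16}\b"),
    "password_assignment": re.compile(r"(?i)\b(?:password|passwd|pwd)\s*[:=]\s*['\"]?[^\s'\"]+"),
}
# Long runs of base64-alphabet characters, optionally padded.
B64_CANDIDATE = re.compile(r"[A-Za-z0-9+/]{16,}={0,2}")


def _match_rules(text: str, suffix: str = "") -> List[Tuple[str, str]]:
    return [(name + suffix, m.group(0)) for name, rx in PATTERNS.items() for m in rx.finditer(text)]


def scan_text(text: str) -> List[Tuple[str, str]]:
    """Return (rule, match) pairs, including secrets hidden inside base64 blobs."""
    hits = _match_rules(text)
    for blob in B64_CANDIDATE.findall(text):
        try:
            decoded = base64.b64decode(blob, validate=True).decode("utf-8")
        except (binascii.Error, UnicodeDecodeError):
            continue  # not valid base64, or not text once decoded
        hits.extend(_match_rules(decoded, suffix="_b64"))
    return hits
```

Running something like this over every file in a repository (and its history) is the cheap version of what attackers do the moment they land in GitHub.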

Next, it is strongly recommended to enforce MFA and least-privilege access policies. These are security basics! Everyone should be using MFA today because of the protection it offers against simple password weaknesses. It mitigates many low-effort attacks and adds an extra step attackers must bypass if credentials are obtained. Cutting access down to least privilege is also basic security hygiene that should be done by default, as it limits pivoting and lateral movement.

MFA gives defenders time to detect and take action, while also serving as a barrier to entry for attackers. If I am on an operation and discover credentials, but know that MFA is in use, I will be selective about my next actions and about which systems I attempt to authenticate to. This is because nearly everything you do on the wire is logged somewhere, and it ultimately leaves breadcrumbs, such as failed authentications, that defenders can investigate. Putting attackers on their back foot gives defenders time to investigate and identify IoCs. Distance to objective is time, and the breach game is all about time!
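Those breadcrumbs are most useful when correlated: a successful password followed by repeated MFA failures is a strong hint that the password itself is known to someone else. The sketch below assumes authentication events have been normalized into `(user, timestamp, outcome)` tuples with hypothetical outcome values; a real identity provider log will need mapping onto this shape.

```python
from collections import defaultdict
from typing import Dict, Iterable, List, Set, Tuple


def mfa_breadcrumbs(events: Iterable[Tuple[str, int, str]], threshold: int = 2) -> List[str]:
    """Flag users who passed the password step but then failed MFA repeatedly.

    events: (user, timestamp, outcome) tuples; the outcome values "password_ok",
    "mfa_failed" and "mfa_ok" are a hypothetical normalized schema.
    """
    awaiting_mfa: Dict[str, bool] = {}       # a password succeeded, MFA not yet passed
    streak: Dict[str, int] = defaultdict(int)  # MFA failures since the last MFA success
    flagged: Set[str] = set()
    for user, _ts, outcome in sorted(events, key=lambda e: e[1]):
        if outcome == "password_ok":
            awaiting_mfa[user] = True  # streak deliberately NOT reset on a new password attempt
        elif outcome == "mfa_failed" and awaiting_mfa.get(user):
            streak[user] += 1
            if streak[user] >= threshold:
                flagged.add(user)
        elif outcome == "mfa_ok":
            awaiting_mfa[user] = False
            streak[user] = 0
    return sorted(flagged)
```

Not resetting the streak on each new password success is the key design choice: an attacker retrying the full login flow would otherwise never accumulate enough failures to trip the threshold.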

Given the nature of the breach, I would also strongly recommend having a governance program in place to inventory connected applications and their respective secrets (e.g., API keys, OAuth tokens). This includes rotating secrets, having processes to revoke secrets identified as leaked or breached, validating the lifecycle of keys and tokens, and scoping token access to least privilege within the organization.
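As a sketch of what "validating the lifecycle" might look like once an inventory exists, the helper below flags secrets that are overdue for rotation or have no accountable owner. The `Secret` record and its fields, including the per-secret `max_age_days` policy, are hypothetical; a real inventory would be populated from your secrets manager or SaaS admin consoles.

```python
from dataclasses import dataclass
from datetime import date
from typing import List, Optional, Tuple


@dataclass
class Secret:
    """Hypothetical inventory record for an API key, OAuth token, or password."""
    name: str
    owner: Optional[str]   # accountable team; None means nobody will rotate or revoke it
    last_rotated: date
    max_age_days: int      # per-secret rotation policy (an org-specific assumption)


def lifecycle_findings(inventory: List[Secret], today: date) -> List[Tuple[str, str]]:
    findings = []
    for s in inventory:
        age = (today - s.last_rotated).days
        if age > s.max_age_days:
            findings.append((s.name, f"rotation overdue by {age - s.max_age_days} days"))
        if not s.owner:
            findings.append((s.name, "no owner"))
    return findings
```

An ownerless secret is worth flagging on its own: during an incident, nobody will know it exists, let alone rotate it.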

Identity security, or at a minimum SaaS telemetry alerting, is critical for any sort of detection or defensive action against this style of modern breach. There is a distinct need to alert on bulk exports, unusual API execution, and other strong outliers from standard user behavior. This is a real pain point for most SOC/IR teams, as it is very difficult to write strong detections around SaaS identities and applications with commonly available SIEM or SOAR tooling.
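One simple way to approximate a bulk-export alert is to compare each identity's (user or integration) export volume today against its own history rather than a global threshold. This is a minimal sketch: the input dictionaries are a hypothetical schema, and the `multiplier` and `floor` defaults are illustrative rather than tuned values.

```python
from statistics import median
from typing import Dict, List, Tuple


def bulk_export_alerts(history: Dict[str, List[int]], today: Dict[str, int],
                       multiplier: float = 10.0, floor: int = 10_000) -> List[Tuple[str, int, float]]:
    """Flag identities whose exported-record count today far exceeds their own baseline.

    history: identity (user or integration) -> past daily exported-record counts.
    today:   identity -> today's exported-record count.
    multiplier and floor are illustrative, untuned thresholds; identities with no
    history fall back to the floor alone.
    """
    alerts = []
    for identity, count in today.items():
        baseline = median(history.get(identity) or [0])
        threshold = max(multiplier * baseline, floor)
        if count > threshold:
            alerts.append((identity, count, threshold))
    return alerts
```

Using the median keeps a single past spike from inflating the baseline, and the floor keeps low-volume identities from alerting on trivially small increases.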

In the field, Red Teamers have long treated SaaS applications (especially after a simple token theft or a credential capture via a capture portal) as a safe harbor to operate from. This is because it is an open secret that detections for API calls and other SaaS activity are typically very poor. Concurrent sessions, for example, are a consistent blind spot because developers legitimately need multiple sessions to test things, multiple tabs open, the ability to run batch jobs while logged into a GUI, etc. As a Red Teamer, I know I can operate as the developer’s identity/token without any real fear of detection and throw stealth out the window.
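Because raw concurrent-session counts are noisy for developers, a more useful signal is overlap across networks: the same identity or token active at the same time from two unrelated egress points. The sketch below assumes sessions are available as `(identity, start, end, network)` tuples, where `network` is whatever grouping you trust (ASN, known egress range); that tuple shape is hypothetical, and legitimate cases such as a mid-session VPN change will likely still need allowlisting.

```python
from itertools import combinations
from typing import Dict, Iterable, List, Tuple

Session = Tuple[str, int, int, str]  # (identity, start, end, network); hypothetical shape


def cross_network_overlaps(sessions: Iterable[Session]) -> List[Tuple[str, str, str]]:
    """Report overlapping sessions for one identity that come from different networks.

    Many parallel sessions from the SAME network are normal developer behavior,
    so only cross-network overlap is reported.
    """
    by_identity: Dict[str, List[Tuple[int, int, str]]] = {}
    for identity, start, end, network in sessions:
        by_identity.setdefault(identity, []).append((start, end, network))
    findings = []
    for identity, spans in by_identity.items():
        for (s1, e1, n1), (s2, e2, n2) in combinations(spans, 2):
            if n1 != n2 and s1 < e2 and s2 < e1:  # the two time intervals overlap
                findings.append((identity, n1, n2))
    return findings
```

The pairwise comparison is quadratic per identity, which is fine for a sketch; at scale you would sort sessions by start time and sweep instead.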

There are other soft spots (like VPN connections and browser pivots/extensions), but SaaS is worth calling out for this breach because attackers are shifting away from the typical model (deploy malware, C2 callback, pivot, act on objective) in favor of living in SaaS and using LOLBins, GTFOBins, etc. This makes detection harder, since the atypical WinAPI calls (or whatever signal defenders typically detect on) are simply not there.

Summary

There needs to be a shift in focus from “protect the endpoint and domain controller” to “protect high-value applications in a blended/hybrid environment.” The habit of “patching and alerting” on the “vulnerabilities and flaws” identified by an audit or annual pentest is also flawed, as it doesn’t consider the environment cohesively or the value an org presents to an attacker. Shifting to an attack path mentality, focused on “what are the most valuable crown jewels we need to protect?”, will drive a more realistic understanding of defensive posture. In a healthy org, this should include a zero-trust model for everything in the supply chain. Some of this will require uncomfortable conversations and read-outs from offensive security consultants and teams, but it is absolutely critical for enterprises to move this thinking forward in order to prevent future breaches like the Salesloft Drift incident.

Tune in to our webinar, Breaking Down the Salesforce/Drift Breach – An Attacker’s POV, on October 14th at 12pm ET to learn more.